Table of Contents

- The taki Cluster

- CPU, GPU, and Big Data Clusters in taki

- CPU Cluster

- GPU Cluster

- Big Data Cluster

- Types of Nodes

- Storage

The taki Cluster

The taki cluster is a heterogeneous cluster with equipment acquired in 2009, 2013, 2018, and 2021. Equipment from 2003, 2007, 2008, 2009, 2010, and 2013 has since been retired. The equipment of taki, including the retired HPCF2013 and HPCF2009 nodes, can be summarized as follows:

- HPCF2021:

- 18 compute nodes, each with two Intel Cascade Lake CPUs

- 2 CPUs each with 24 cores

- Hyperthreading disabled, so a total of 48 threads per node

- Information about the Intel Cascade Lake CPUs can be found here


- HPCF2018:

- 50 compute and 2 develop nodes, each with two Intel Skylake CPUs

- 2 CPUs each with 18 cores

- Hyperthreading disabled, so a total of 36 threads per node

- Information about the Intel Skylake CPUs can be found here

- 1 GPU node with 4 NVIDIA Tesla V100 GPUs connected by NVLink

- 8 Big Data nodes, each with two Skylake CPUs and 48 TB of disk space. The Big Data nodes have been folded into the compute nodes, increasing their number from 42 to 50.

- 2 login/user nodes, 1 management node


- HPCF2013: The 2013 nodes were shut down in August 2023.

- 49 compute, 2 develop, and 1 interactive node, each containing two 8-core Intel Ivy Bridge CPUs

- 2 CPUs each with 8 cores

- Hyperthreading disabled, so a total of 16 threads per node

- Information about the Intel Ivy Bridge CPUs can be found here

- 18 hybrid CPU/GPU nodes, each with 2 CPUs and 2 GPUs


- HPCF2009: The 2009 nodes were shut down in October 2020.

- 82 compute and 2 develop nodes, each with two 4-core Intel Nehalem CPUs

- 2 CPUs each with 4 cores

- Hyperthreading disabled, so a total of 8 threads per node

- Information about the Intel Nehalem CPUs can be found here


All nodes run the CentOS 7 version of the Linux operating system. Only the bash shell is supported.
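As listed in the summary above, hyperthreading is disabled on every node generation, so the number of threads per node equals the number of physical cores (2 CPUs × cores per CPU). One way to confirm this on a Linux node is to count logical processors and distinct (physical id, core id) pairs in /proc/cpuinfo. The Python sketch below assumes the usual x86 /proc/cpuinfo layout; the function name count_cpus is hypothetical and not an HPCF tool.

```python
from __future__ import annotations

from pathlib import Path


def count_cpus(cpuinfo_text: str) -> tuple[int, int, int]:
    """Return (sockets, physical cores, logical CPUs) parsed from /proc/cpuinfo text.

    Assumes the x86 layout, where each logical CPU is a blank-line-separated
    block with "processor", "physical id", and "core id" fields.
    """
    sockets = set()
    cores = set()
    logical = 0
    for block in cpuinfo_text.strip().split("\n\n"):
        fields = {}
        for line in block.splitlines():
            key, _, value = line.partition(":")
            fields[key.strip()] = value.strip()
        if "processor" not in fields:
            continue  # skip non-CPU blocks some kernels append
        logical += 1
        phys = fields.get("physical id", "0")
        sockets.add(phys)
        # core id restarts at 0 on each socket, so pair it with the socket id
        cores.add((phys, fields.get("core id", fields["processor"])))
    return len(sockets), len(cores), logical


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        n_sockets, n_cores, n_logical = count_cpus(cpuinfo.read_text())
        print(f"{n_sockets} sockets, {n_cores} cores, {n_logical} threads")
        # With hyperthreading disabled, each physical core exposes exactly one thread.
        print("hyperthreading off" if n_cores == n_logical else "hyperthreading on")
```

On an HPCF2018 compute node, for example, the expectation from the summary is 2 sockets, 36 cores, and 36 threads.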

Some photos of the cluster follow here.

CPU, GPU, and Big Data Clusters in taki

For access, taki is divided into three clusters of distinct types, with components from several purchase dates:

- CPU Cluster:

- HPCF2021: 18 compute nodes, each with two 24-core Intel Cascade Lake CPUs and 192 GB of memory;

- HPCF2018: 50 compute and 2 develop nodes, each with two 18-core Intel Skylake CPUs and 384 GB of memory;

- GPU Cluster:

- HPCF2018: 1 GPU node containing 4 NVIDIA Tesla V100 GPUs connected by NVLink and 2 Intel Skylake CPUs;

- Other Nodes:

- 2 login/user nodes (taki-usr1, taki-usr2) identical to 2018 CPU nodes,

- 1 management node.

The following sections document each of the three clusters in more detail.

CPU Cluster

The CPU cluster on taki is specified as follows.

- HPCF2021 [cpu2021 partition]: The 18 compute nodes total 864 cores and over 3 TB of pooled memory.

- 18 compute nodes (cnode[051-068]), each with two 24-core Intel Xeon Gold 6240R Cascade Lake CPUs (2.4 GHz clock speed, 35.75 MB L3 cache, 6 memory channels, 165 W power), for a total of 48 cores per node,

- Each node has 192 GB of memory (12 x 16 GB DDR4 at 2933 MT/s) for a total of 4 GB per core, and a 480 GB SSD disk,


- HPCF2018 [high_mem, develop partitions]: The 50 compute nodes total 1800 cores and over 19 TB of pooled memory.

- 50 compute nodes (bdnode[001-008], cnode[002-029,031-044]) and 2 develop nodes (cnode[001,030]), each with two 18-core Intel Xeon Gold 6140 Skylake CPUs (2.3 GHz clock speed, 24.75 MB L3 cache, 6 memory channels, 140 W power), for a total of 36 cores per node,

- Each node has 384 GB of memory (12 x 32 GB DDR4 at 2666 MT/s) for about 10.7 GB per core, and a 120 GB SSD disk,

- The nodes are connected by a network of four 36-port EDR (Enhanced Data Rate) InfiniBand switches (100 Gb/s bandwidth, 90 ns latency);


- HPCF2013 [batch, develop partitions]: The 49 compute nodes total 784 cores and over 3 TB of pooled memory. The 2013 nodes were shut down in August 2023.

- 49 compute nodes (cnode[102-133,135-151]), 2 develop nodes (cnode[101,134]), and 1 interactive node (inter101), each with two 8-core Intel E5-2650v2 Ivy Bridge CPUs (2.6 GHz clock speed, 20 MB L3 cache, 4 memory channels), for a total of 16 cores per node,

- Each node has 64 GB of memory (8 x 8 GB DDR3) for a total of 4 GB per core, and 500 GB of local hard drive,

- The nodes are connected by a QDR (quad-data rate) InfiniBand switch;

- One interactive node, inter101, identical to the other nodes but with 128 GB (8 x 16 GB DDR3) of memory.


- HPCF2009 [batch, develop partitions]: The 82 compute nodes total 656 cores and nearly 2 TB of pooled memory. The 2009 nodes were shut down in October 2020.

- 82 compute nodes (cnode[202-242,244-284]) and 2 develop nodes (cnode[201,243]), each with two 4-core Intel Nehalem X5550 CPUs (2.6 GHz clock speed, 8 MB L3 cache, 3 memory channels), for a total of 8 cores per node,

- Each node has 24 GB of memory (6 x 4 GB DDR3) for a total of 3 GB per core, and a 120 GB local hard drive,

- The nodes are connected by a QDR (quad-data rate) InfiniBand switch.


All above nodes are connected by a network of EDR (Enhanced Data Rate) InfiniBand switches (100 Gb/s bandwidth, 90 ns latency).
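The per-partition totals quoted above follow from nodes × CPUs per node × cores per CPU, and from nodes × memory per node. The Python sketch below reproduces them from the listed specifications; the class and field names are illustrative only and are not part of any taki tool. As in the text, the HPCF2013 entry counts the 49 compute nodes only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeGeneration:
    name: str
    nodes: int             # compute nodes counted in the partition totals
    sockets: int           # CPUs per node
    cores_per_socket: int
    mem_gb: int            # memory per node in GB

    @property
    def cores_per_node(self) -> int:
        return self.sockets * self.cores_per_socket

    @property
    def total_cores(self) -> int:
        return self.nodes * self.cores_per_node

    @property
    def pooled_mem_tb(self) -> float:
        # Uses 1 TB = 1000 GB, matching the rounded figures in the text.
        return self.nodes * self.mem_gb / 1000

    @property
    def gb_per_core(self) -> float:
        return self.mem_gb / self.cores_per_node


GENERATIONS = [
    NodeGeneration("HPCF2021", 18, 2, 24, 192),
    NodeGeneration("HPCF2018", 50, 2, 18, 384),
    NodeGeneration("HPCF2013", 49, 2, 8, 64),
    NodeGeneration("HPCF2009", 82, 2, 4, 24),
]

if __name__ == "__main__":
    for g in GENERATIONS:
        print(f"{g.name}: {g.cores_per_node} cores/node, {g.total_cores} cores, "
              f"{g.pooled_mem_tb:.2f} TB pooled, {g.gb_per_core:.1f} GB/core")
```

For HPCF2018 this gives 1800 cores, 19.2 TB of pooled memory, and 384 / 36 ≈ 10.7 GB per core.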

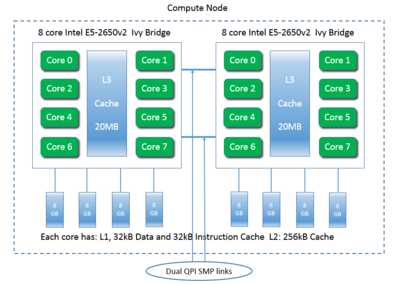

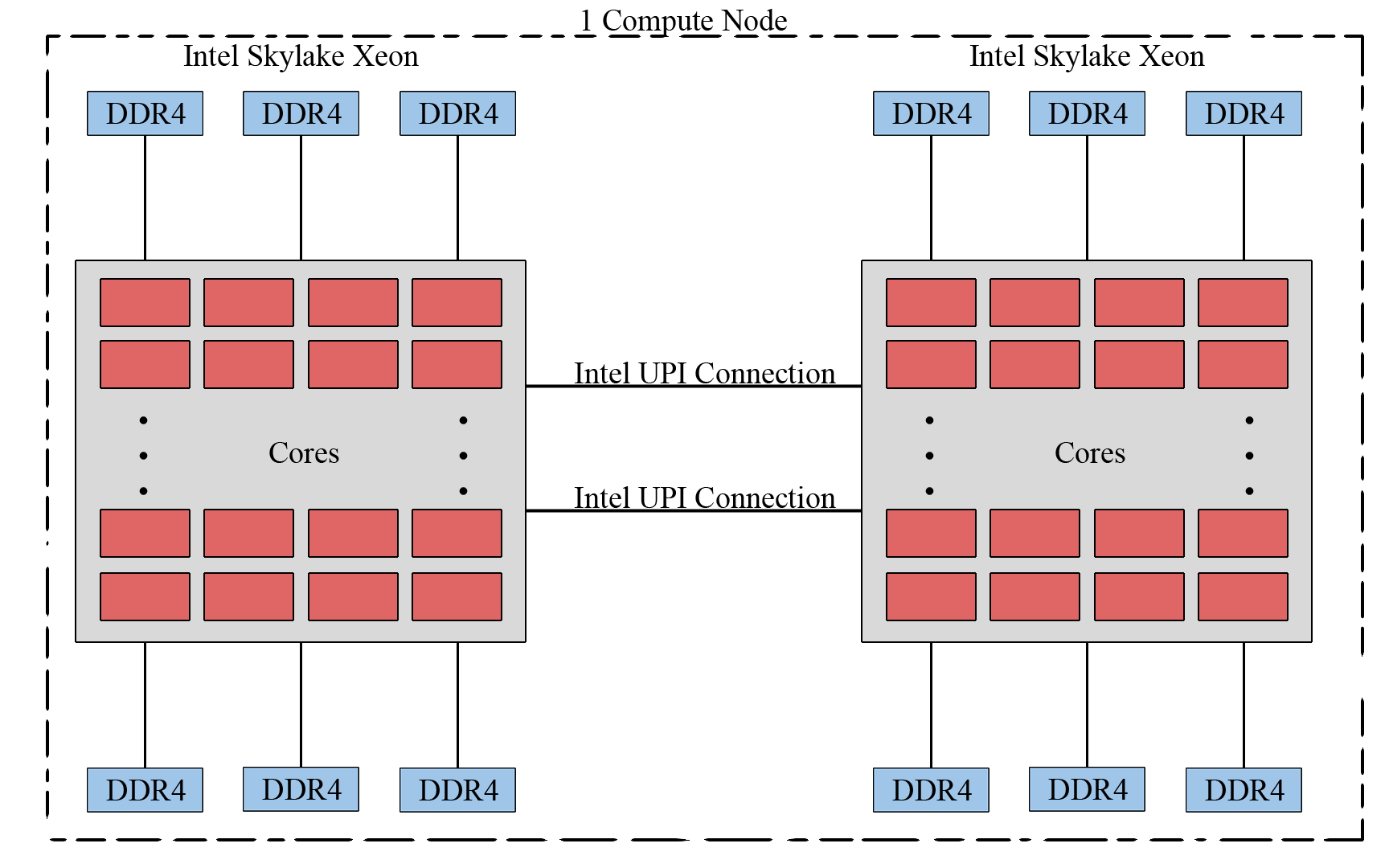

The following schematics show the architecture of the CPU nodes for HPCF2013 and HPCF2018.

The first schematic shows an HPCF2013 CPU node, which consists of two eight-core 2.6 GHz Intel E5-2650v2 Ivy Bridge CPUs. Each core of each CPU has a dedicated 32 kB L1 cache and 256 kB L2 cache. All cores of each CPU share 20 MB of L3 cache. The node’s 64 GB of memory consists of eight 8 GB DIMMs, four of which are connected to each CPU. The two CPUs of a node are connected to each other by two QPI (QuickPath Interconnect) links. Nodes are connected by a quad-data rate (QDR) InfiniBand interconnect.

The layout of an HPCF2018 CPU node is shown in the second schematic. The node consists of two 2.3 GHz 18-core Intel Xeon Gold 6140 Skylake CPUs, connected by UPI (Ultra Path Interconnect). The node has a total of 384 GB of memory, consisting of six 32 GB DDR4 DIMMs connected to each CPU. Nodes are connected by EDR (Enhanced Data Rate) InfiniBand switches.
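Both layouts split the node’s DIMMs evenly between the two CPUs. The sketch below works out the memory attached to each socket from the DIMM counts above and, as an illustration, a theoretical peak memory bandwidth per socket for the DDR4 nodes. It assumes one DIMM per channel and the standard 64-bit (8-byte) data path per DDR channel; the peak figure is an upper bound computed from the listed specifications, not a measured value, and the function names are hypothetical.

```python
def mem_per_socket_gb(dimms_per_socket: int, dimm_gb: int) -> int:
    """Memory attached to one CPU socket, in GB."""
    return dimms_per_socket * dimm_gb


def peak_bandwidth_gbs(channels: int, transfer_rate_mts: int, bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s: channels x MT/s x bytes per transfer.

    Assumes an 8-byte (64-bit) data path per DDR channel; 1 GB/s = 1000 MB/s.
    """
    return channels * transfer_rate_mts * bus_bytes / 1000


if __name__ == "__main__":
    # HPCF2013: four 8 GB DDR3 DIMMs per CPU; HPCF2018: six 32 GB DDR4 DIMMs per CPU.
    print("HPCF2013 per socket:", mem_per_socket_gb(4, 8), "GB")
    print("HPCF2018 per socket:", mem_per_socket_gb(6, 32), "GB")
    # DDR4 speeds from the CPU cluster list; both generations have 6 channels per CPU.
    print(f"HPCF2018 peak per socket: {peak_bandwidth_gbs(6, 2666):.1f} GB/s")
    print(f"HPCF2021 peak per socket: {peak_bandwidth_gbs(6, 2933):.1f} GB/s")
```

Sustained bandwidth in practice is lower than this peak; the calculation mainly shows why populating all six channels per CPU matters.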


GPU Cluster

The GPU cluster is specified as follows. The GPU cluster description page provides more information.

- HPCF2018 [gpu2018 partition]:

- 1 GPU node (gpunode001) containing four NVIDIA Tesla V100 GPUs (5120 computational cores over 80 SMs, 16 GB onboard memory) connected by NVLink and two 18-core Intel Skylake CPUs,

- The node has 384 GB of memory (12 x 32 GB DDR4 at 2666 MT/s) and a 120 GB SSD disk,


- HPCF2013 [gpu partition]: The 2013 nodes were shut down in August 2023.

- 18 hybrid CPU/GPU nodes (gpunode[101-118]), each with two NVIDIA K20 GPUs (2496 computational cores over 13 SMs, 5 GB onboard memory) and two 8-core Intel E5-2650v2 Ivy Bridge CPUs (2.6 GHz clock speed, 20 MB L3 cache, 4 memory channels),

- Each node has 64 GB of memory (8 x 8 GB DDR3) and 500 GB of local hard drive,

- The nodes are connected by a QDR (quad-data rate) InfiniBand switch;


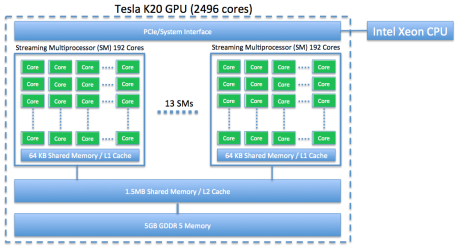

The following schematics show the architectures of the two node types contained in the GPU cluster.

The Tesla K20, shown in the first schematic below, contains 2496 cores over 13 SMs and 5 GB of onboard memory. The second schematic shows how the GPUs are connected in the hybrid CPU/GPU node for HPCF2013. The node contains two Tesla K20 GPUs, each connected to a separate Ivy Bridge CPU. While the CPUs are connected by QPI, there is no connection between the GPUs. In order for the GPUs to communicate with each other, any shared information must pass between the CPUs. Therefore the GPUs cannot operate independently of the CPUs.


The architecture of the newer Tesla V100 GPU is shown in the first schematic below. It contains 5120 cores over 80 SMs and 16 GB of onboard memory. In addition, each V100 contains 640 tensor cores. The four Tesla V100 GPUs in the HPCF2018 GPU node are arranged in the configuration shown in the second schematic. Notice that all GPUs are tightly connected through NVLink, allowing information to be shared between them without passing through the CPUs. The node contains two Skylake CPUs that are connected to each other by UPI. A PCIe switch connects the GPUs to the first CPU [Han, Xu, and Dandapanthula 2017] [Xu, Han, and Ta 2019]. Given the rich connections between the GPUs, jobs run on this node should primarily use the GPUs rather than the CPUs.
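The difference between the two GPU node designs can be made concrete with a toy model: treat each device as a graph vertex, each link described above as an edge, and look for the shortest path from one GPU to another. The Python sketch below is a simplification that only counts hops; it ignores link bandwidths and how the CUDA driver actually routes transfers, and the vertex names (GPU0, CPU0, PCIeSwitch) are illustrative.

```python
from collections import deque

# HPCF2013 hybrid node: each K20 hangs off its own Ivy Bridge CPU; CPUs joined by QPI.
K20_NODE = {
    ("GPU0", "CPU0"),
    ("GPU1", "CPU1"),
    ("CPU0", "CPU1"),
}

# HPCF2018 GPU node: four V100s linked by NVLink, CPUs joined by UPI,
# and a PCIe switch connecting the GPUs to the first CPU.
V100_NODE = {
    *{(f"GPU{i}", f"GPU{j}") for i in range(4) for j in range(i + 1, 4)},
    ("CPU0", "CPU1"),
    ("CPU0", "PCIeSwitch"),
    *{(f"GPU{i}", "PCIeSwitch") for i in range(4)},
}


def shortest_path(links, src, dst):
    """Breadth-first search over an undirected link set; returns the hops or None."""
    adjacency = {}
    for a, b in links:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            break
        for nxt in sorted(adjacency.get(node, ())):
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    if dst not in prev:
        return None
    path = []
    node = dst
    while node is not None:
        path.append(node)
        node = prev[node]
    return path[::-1]


if __name__ == "__main__":
    for name, links in [("K20 node", K20_NODE), ("V100 node", V100_NODE)]:
        path = shortest_path(links, "GPU0", "GPU1")
        via_cpu = any(hop.startswith("CPU") for hop in path)
        print(f"{name}: {' -> '.join(path)} (through a CPU: {via_cpu})")
```

In the K20 model the only route between the GPUs is GPU0, CPU0, CPU1, GPU1, whereas in the V100 model the GPUs reach each other in a single NVLink hop.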


Big Data Cluster

The Big Data cluster is specified as follows. The Big Data cluster description page provides more information. The nodes of the Big Data cluster were folded into the 2018 CPU nodes in August 2023, increasing the number of CPU nodes from 42 to 50.

- HPCF2018:

- 8 Big Data nodes, each with two 18-core Intel Xeon Gold 6140 Skylake CPUs (2.3 GHz clock speed, 24.75 MB L3 cache, 6 memory channels, 140 W power), for a total of 36 cores per node,

- Each node has 384 GB of memory (12 x 32 GB DDR4 at 2666 MT/s) and 48 TB (12 x 4 TB) SATA hard disks,

- The nodes are connected by a 10 Gb/s Ethernet network.

Types of Nodes

The taki cluster contains several types of nodes that fall into four main categories for usage.

- Management node – There is one management node, which is reserved for administration of the cluster. It is not available to users.

- User nodes – Users work on these nodes directly. This is where users log in, access files, write and compile code, and submit jobs to the scheduler to be run on the compute nodes. Furthermore, these are the only nodes that may be accessed via SSH/SCP from outside of the cluster.

- Compute nodes – These nodes are where the majority of computing on the cluster takes place. Users normally do not interact with these nodes directly. Instead, jobs are created and submitted from the user nodes, and a program called the scheduler decides which compute resources are available to run the job. In principle, a user could connect to compute nodes directly from a user node by SSH (e.g. “ssh n84”). However, SSH access to the compute nodes is disabled to help maintain the stability of the cluster. Compute nodes should instead be allocated by the scheduler. Advanced users should be very careful when doing things such as spawning child processes or threads: you must ensure that you use only the resources allocated to you by the scheduler. It is best to contact HPCF user support if there are questions about how to set up a non-standard job on taki.

- Development nodes – These are special compute nodes dedicated to running code that is under development. This allows users to test their programs without interfering with programs running in production. Programs are also limited to a short maximum run time on these nodes so that users don’t need to wait long before a program runs. Development jobs are expected to be small in scale but rerun frequently as you work on your code. The availability of two development nodes allows you to try several useful configurations: single core, several cores on one processor, several cores on multiple processors of one machine, all cores on multiple machines, etc.

- Interactive node – This node is available for interactive use, that is, for jobs you interact with directly: you enter a command and wait for the response. All other nodes use batch processing.
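For advanced users who spawn threads or worker processes on a compute node, a common pattern is to size the worker pool from what the scheduler allocated instead of from the node’s full core count. The Python sketch below assumes the job was submitted with --cpus-per-task (in which case Slurm sets SLURM_CPUS_PER_TASK) and otherwise falls back to the process’s CPU affinity mask; whether that mask reflects the allocation depends on how Slurm is configured on taki, so treat this as an illustration rather than an HPCF-endorsed recipe.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def allocated_cpus() -> int:
    """Number of CPUs this process should use inside a Slurm allocation.

    Prefers SLURM_CPUS_PER_TASK, then the Linux CPU affinity mask, and finally
    falls back to a single CPU rather than assuming the whole node is free.
    """
    value = os.environ.get("SLURM_CPUS_PER_TASK")
    if value and value.isdigit() and int(value) > 0:
        return int(value)
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))
    return 1


if __name__ == "__main__":
    workers = allocated_cpus()
    # Do not use os.cpu_count() here: it reports every CPU on the node,
    # including cores the scheduler gave to other users' jobs.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        squares = list(pool.map(lambda x: x * x, range(8)))
    print(f"{workers} worker threads, results {squares}")
```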

The taki cluster features several different computing environments. For serial CPU-only jobs, we ask that users use the HPCF2013 nodes, since their core-by-core performance is equivalent to that of HPCF2018 and HPCF2021. Only properly parallelized code can take full advantage of the 36-core nodes in HPCF2018 or the 48-core nodes in HPCF2021.
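One standard way to quantify why only well-parallelized code benefits from 36 or 48 cores is Amdahl’s law: if a fraction p of the runtime parallelizes perfectly, the ideal speedup on n cores is 1 / ((1 - p) + p / n). This is a textbook model used here for illustration, not a measurement on taki:

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup on `cores` cores when `parallel_fraction` of the runtime parallelizes."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / cores)


if __name__ == "__main__":
    for p in (0.0, 0.5, 0.9, 0.99):
        print(f"p={p:.2f}: 36 cores -> {amdahl_speedup(p, 36):5.1f}x, "
              f"48 cores -> {amdahl_speedup(p, 48):5.1f}x")
```

A serial code (p = 0) gains nothing from extra cores, and even a code that is 90% parallel reaches only about 8x on 36 cores and about 8.4x on 48 cores.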

Storage

There are a few special storage systems attached to the clusters, in addition to the standard Unix filesystem. Here we describe the areas which are relevant to users.

Home directory

Each user has a home directory on the /home partition. Users can only store 500 MB of data in their home directory.
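To see how close you are to the 500 MB limit, you can total the files in your home directory. The Python sketch below sums apparent file sizes without following symbolic links, which matters here because the research storage links in your home directory point to a separate storage area. It assumes 500 MB means 500 MiB, and the filesystem’s own quota accounting (which counts allocated blocks) may differ somewhat from this total; the names are hypothetical.

```python
import os
import stat

# Assumption: the 500 MB home quota is read here as 500 MiB (500 * 2**20 bytes).
HOME_QUOTA_BYTES = 500 * 2**20


def tree_size_bytes(root: str) -> int:
    """Total apparent size of regular files under root, without following symlinks."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):  # followlinks=False by default
        for name in filenames:
            try:
                st = os.lstat(os.path.join(dirpath, name))
            except OSError:
                continue  # file vanished or is unreadable
            if stat.S_ISREG(st.st_mode):  # skips symlinks, sockets, etc.
                total += st.st_size
    return total


if __name__ == "__main__":
    used = tree_size_bytes(os.path.expanduser("~"))
    print(f"home: {used / 2**20:.1f} MiB of {HOME_QUOTA_BYTES / 2**20:.0f} MiB "
          f"({100 * used / HOME_QUOTA_BYTES:.0f}%)")
```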

Research Storage

Users are also given space in the research storage area, which can be accessed from anywhere on the cluster. Research storage provides space for both user and group storage, accessible via symbolic links in users’ home directories. Note that this area is not backed up.

Scratch Space

All compute nodes have a local /scratch directory, generally about 100 GB in size. For each job, Slurm creates a folder in this directory and sets the environment variable JOB_SCRATCH_DIR to its path for the duration of the job. Users have access to this folder only while their job runs, and the space is purged after the job completes. The /scratch space is shared among all users, but your data is accessible only to you. It is safer to use for jobs than the usual /tmp directory, since critical system processes also require /tmp.
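A job script can pick up its per-job scratch folder from JOB_SCRATCH_DIR. The Python sketch below does that and, only when the variable is unset (for example when testing outside a Slurm job), falls back to a private temporary directory; the helper name and the fallback behavior are assumptions for illustration.

```python
import os
import tempfile
from pathlib import Path


def job_scratch_dir() -> Path:
    """Per-job scratch directory, falling back to a private temp dir outside a job."""
    scratch = os.environ.get("JOB_SCRATCH_DIR")
    if scratch and os.path.isdir(scratch):
        return Path(scratch)
    # Fallback for testing only: tempfile.mkdtemp() normally lands under /tmp.
    return Path(tempfile.mkdtemp(prefix="job-scratch-"))


if __name__ == "__main__":
    work = job_scratch_dir()
    intermediate = work / "intermediate.dat"
    intermediate.write_bytes(b"\0" * 1024)  # large temporary files belong here
    print("writing temporary files to", work)
    # Copy anything you need to keep to home or research storage before the
    # job ends: the job's scratch folder is purged afterwards.
```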

Tmp Space

All nodes (including the user nodes) have a local /tmp directory, generally 40 GB in size. Files in a machine’s /tmp directory are visible only on that machine, and the system deletes them periodically. Furthermore, the space is shared among all users. Users should use Scratch Space rather than /tmp whenever possible.

UMBC AFS Storage Access

Your AFS partition is the directory where your personal files are stored when you use the DoIT computer labs or the gl.umbc.edu login nodes. The UMBC-wide /afs can be accessed from the taki login nodes.